In November 2022, ChatGPT was released. The generative AI chatbot unleashed an unprecedented whirlwind of news, think pieces, and technology releases. It sent shock waves through formal education due to the threat it poses to established methods of assessment. AI has absolutely dominated the tech and education news cycles, seeping into every feed that I followed, and become a household name fast. Once I even saw someone with a "ChatGPT" license plate.

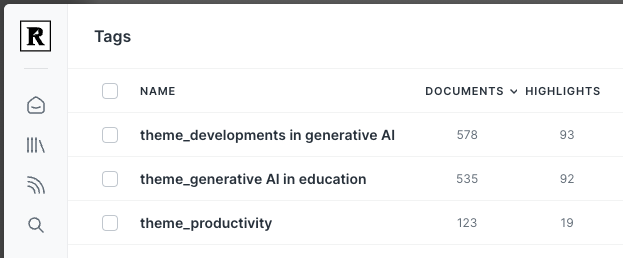

As anyone who has talked to me in the last year would know well, maybe with an eye-roll, I've been a bit obsessed with following AI. Below is a screenshot of the top document tags in my read-it-later app (Readwise Reader). It shows articles and videos that I've saved and tagged by theme since I started using the app at the end of 2022. I've read or watched more than half of the saved content in each of those tags - hundreds of pieces - all trying to make sense of generative AI and its impact.

Honestly, the sheer volume of news and takes has been pretty overwhelming at times. But as we close out the year and I think back over all the things I watched and read, there are many high-signal pieces that stand out from the noise.

In this post I'd like to retrace the path they laid, like stepping stones through the year, to see what story they tell about our collective efforts to make sense of what AI means for work, learning and culture. Each piece is an attempt to explain some aspect of generative AI, so it’s a journey through metaphor and analogy more than anything else.1

Ok, buckle up and get ready to scroll. I've included a lot of pictures this edition for those of you who provided feedback on the last post that some more visuals would be nice.

The links

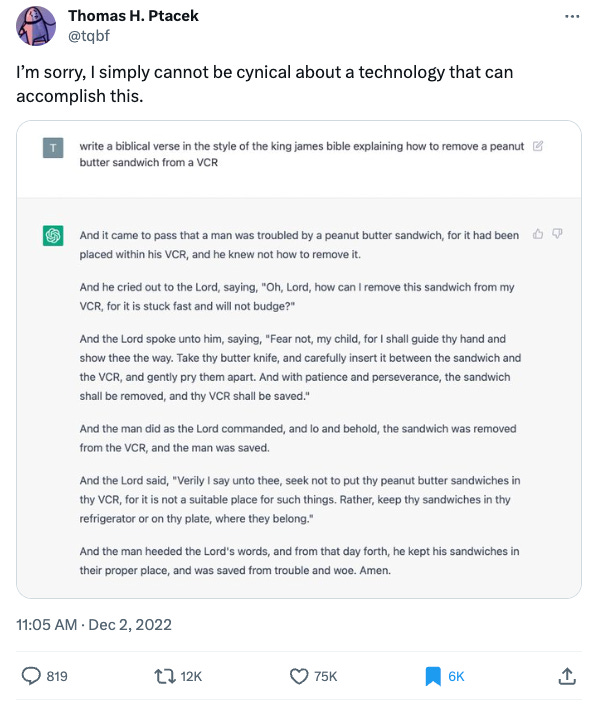

1. Peanut Butter Bible Verse

I could start with OpenAI's ChatGPT announcement post, but honestly this viral tweet by Thomas H Ptacek was what first really drove home the capabilities of this new tool for me. It's what I showed to my wife to explain why this AI stuff was a big deal. It still holds up as one of the funniest and best examples of the genre-blending capabilities of large language models (LLMs). It also highlights that a big part of the early virality of gen AI was essentially as a linguistic party trick.

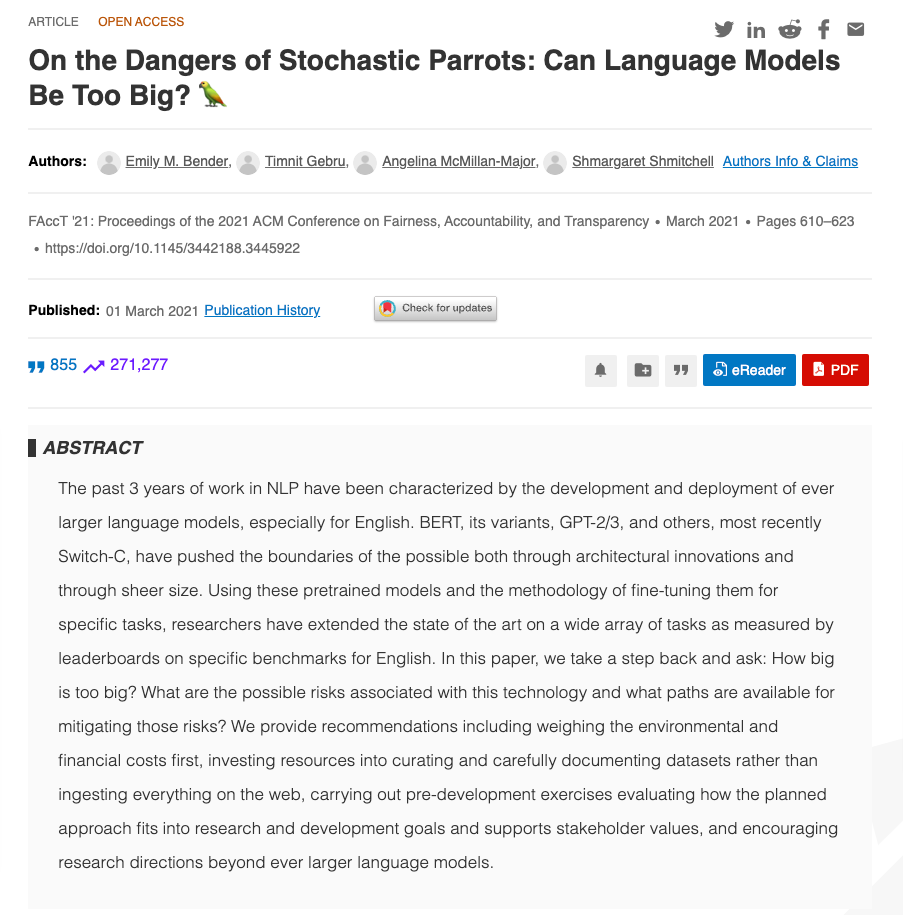

2. Stochastic Parrots

If you've heard of LLMs described as 'stochastic parrots', this is the journal article by Bender et al. (2021) that coined the term. Hard to say what's more surprising: that this was published in 2021 when the best OpenAI model was GPT-3, that publishing it seems to have gotten some of the writers fired by Google, or that they went with the obscure word 'stochastic', forcing everyone to reach for the dictionary. (Personally I would have gone with probabilistic parrots, but then I am a sucker for alliteration, and as you can see in the quote below they needed to use stochastic to avoid repetition.) The paper is actually mostly devoted to the dangers inherent in the development of very large language models - including bias, equity, and environmental impacts. A few years on, the models have only gotten bigger and these risks are still very much present. The parrots come in where they argue that although the output of these models seems coherent, that coherence comes from our human tendency to ascribe meaning and intent to language rather than from any true understanding on the model's part. This can be dangerous when a model unwittingly propagates biases in its training data.

"Contrary to how it may seem when we observe its output, an LM is a system for haphazardly stitching together sequences of linguistic forms it has observed in its vast training data, according to probabilistic information about how they combine, but without any reference to meaning: a stochastic parrot."

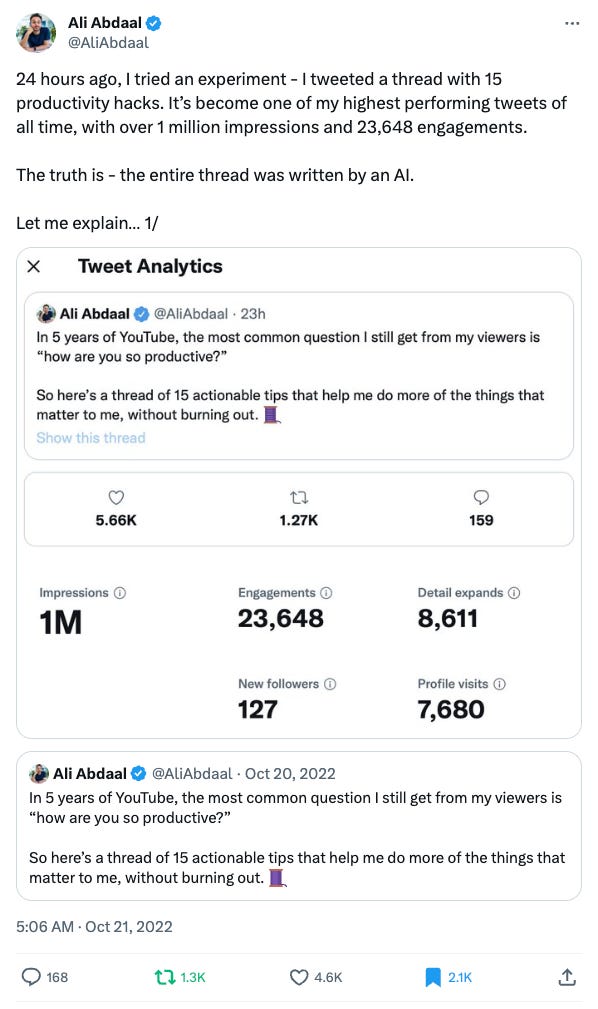

3. 15 Productivity Hacks

Ali Abdaal is one of the most famous productivity YouTubers. In late 2022, he admitted in this thread to using an early-access version of an AI writing tool called Lex to produce a list of his "top 15 productivity hacks". The kicker is that this entirely AI-generated thread (published without disclosure of AI use) became his most popular ever, despite the advice being pretty generic. This was the first time I saw generative AI being used in this way. He asks:

"What does this mean for the Creator Economy? What does it mean for the Twitter threadbois like me who tweet listicles of productivity tips in the hope of growing our audience, if an AI can do a “better" job than our own brains?"

I think we can answer this to some extent now - the smart ones will use it to come up with ideas and save time producing their social media content. A question that remains open for me is, how many people are now using AI to produce online content without disclosing they are using it?2 What does that mean for our social media feeds in the long run?

4. AI Homework

Ben Thompson at Stratechery is best known for emailed essays breaking down complex tech strategy topics - in this one he tackles the impact of generative AI on homework instead (archive is paywalled, unfortunately). Ben's article clearly explains one of the most important concepts for understanding LLMs, which is the difference between probabilistic and deterministic responses:

"AI-generated text is something completely different; calculators are deterministic devices: if you calculate 4,839 + 3,948 - 45 you get 8,742, every time. ... AI output, on the other hand, is probabilistic: ChatGPT doesn’t have any internal record of right and wrong, but rather a statistical model about what bits of language go together under different contexts. ... every answer is a probabilistic result gleaned from the corpus of Internet data that makes up GPT-3; in other words, ChatGPT comes up with its best guess as to the result in 10 seconds, and that guess is so likely to be right that it feels like it is an actual computer executing the code in question."

This element of responses 'feeling' right is what makes generative AI so tricky, and the probabilistic nature is what makes working with it feel like you are rolling the dice every time - because on some level you are. Thompson also makes very lucid points about how education should respond to AI - embrace and empower rather than ban, and adapt our teaching to this new world where AI is abundant.
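For the more code-minded readers, here is a rough sketch of the calculator-versus-chatbot distinction Thompson describes. The calculator function always returns the same number, while the "chatbot" function draws its answer from a weighted list of candidates. The candidate answers and their weights are numbers I made up purely for illustration; a real LLM samples from a far larger distribution over tokens, so treat this as a toy under those assumptions rather than a description of how ChatGPT is built.

```python
import random

def calculator():
    # Deterministic: the same expression always yields the same value.
    return 4839 + 3948 - 45

# Hypothetical answer probabilities, invented purely for illustration.
NEXT_WORD_PROBS = {"8,742": 0.90, "8,724": 0.06, "8,472": 0.04}

def sample_answer(rng):
    # Probabilistic: draw one candidate in proportion to its weight.
    words = list(NEXT_WORD_PROBS)
    weights = list(NEXT_WORD_PROBS.values())
    return rng.choices(words, weights=weights, k=1)[0]

if __name__ == "__main__":
    # The calculator never varies between calls.
    print([calculator() for _ in range(3)])
    # The sampler is usually right, but can differ from run to run.
    rng = random.Random()
    print([sample_answer(rng) for _ in range(10)])
```

Run it a few times and the first list never changes, while the second is mostly the right answer with the occasional plausible-looking wrong one. That is the dice roll in miniature.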

5. RIP College Essay

This opinion piece from Stephen Marche in The Atlantic got a lot of attention with its catchy headline. It dispatches the theme of the headline pretty quickly - that generative AI tools can write passing college-level essays, and this spells big trouble for assessment.

The bulk of the piece, which I might point out is itself an essay, is devoted to arguing that the gulf between the humanities and sciences is increasing right at the time when humanities have a critical role to play in helping us understand and leverage the technology of AI.

6. Humans in the loop

In this piece from Benedict Evans, published in December 2022, he talks about how generative AI was having a moment. A year on it's funny to reflect on quite how much of a 'moment' it's turned out to be. He explains how the probabilistic pattern generation of generative AI is fundamentally different to other AI such as machine learning models designed for pattern recognition. Echoing the ideas in the stochastic parrots article, he explains that image and text generators produce content that looks like the output it is supposed to, without an underlying mental concept of the thing it is mimicking. He asks really important questions that I still find useful today. In what task domains do we care that generative AI output is not entirely accurate? Where do we place the human in the loop when using these tools?

"generative networks, so far, rely on one side on patterns in things that people already created, and on the other on people having new ideas to type into the prompt and picking the ones that are good. So, where do you put the people, at what point of leverage, and in what domains?”

This is also the first time I saw generative AI described as an 'intern' (an idea that Ethan Mollick has also pushed):

“One of the ways I used to describe machine learning was that it gives you infinite interns. You don’t need an expert to listen to a customer service call and hear that the customer is angry and the agent is rude, just an intern, but you can’t get an intern to listen to a hundred million calls, and with machine learning you can. But the other side of this is that ML gives you not infinite interns but one intern with super-human speed and memory - one intern who can listen to a billion calls and say ‘you know, after 300m calls, I noticed a pattern you didn’t know about…’ That might be another way to look at a generative network - it’s a ten-year-old that’s read every book in the library and can repeat stuff back to you, but a little garbled and with no idea that Jonathan Swift wasn’t actually proposing, modestly, a new source of income for the poor.”

7. A view from somewhere

Erik Hoel discusses authorship and ChatGPT, and argues that what makes its output so boring is the very fact that it has no internal perspective, no view on the world.

"I could feel ChatGPT’s authorship, a sort of meticulous neutrality, dispersed throughout, even when it was told to pretend otherwise. No human is so reasonable, so un-opinionated, so godawful boring. It turns out the “view from nowhere” is pretty uninteresting. We want views from somewhere."

What this means is there might be a hard limit on AI creative abilities independent of human intervention - therefore securing the role of artists and writers in producing content people actually want to read and connect with.

8. Blurry JPEGs

Ted Chiang's description of ChatGPT as a "blurry JPEG of the web" was widely shared with good reason. This useful metaphor for understanding AI is based on the way that LLMs generate content through statistical relations in semantic space rather than direct quoting of the sources they were trained on. In this way it is similar to a lossy compression algorithm like JPEG. The original is not stored and retrieved; instead, the image is reproduced from averages of surrounding pixels. Likewise, an LLM produces the most statistically likely output given the starting reference points, say for example peanut butter sandwiches, the King James Bible, and VCRs.
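To see the "not stored and retrieved" idea in miniature, here is a toy sketch of lossy compression. It is emphatically not how JPEG really works (JPEG uses a frequency transform and quantisation); the block-averaging scheme, the function names and the sample numbers are all my own assumptions, chosen only because they fit in a few lines.

```python
def compress(values, block=4):
    # Keep only the average of each block; the original values are discarded.
    return [sum(values[i:i + block]) / len(values[i:i + block])
            for i in range(0, len(values), block)]

def reconstruct(averages, block=4, length=None):
    # Rebuild an approximation by repeating each stored average.
    out = [avg for avg in averages for _ in range(block)]
    return out if length is None else out[:length]

# Made-up "pixel" values for illustration.
original = [10, 12, 11, 13, 50, 52, 49, 51]
stored = compress(original)
approx = reconstruct(stored, length=len(original))

print(stored)  # two averages stand in for eight values
print(approx)  # close to the original, but the fine detail is gone
```

The reconstruction looks roughly right from a distance, yet none of the original values can be recovered from what was stored. That is the blurriness Chiang is pointing at.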

He also talks about why he thinks it's not a good idea to use this too much for writing:

"Your first draft isn’t an unoriginal idea expressed clearly; it’s an original idea expressed poorly, and it is accompanied by your amorphous dissatisfaction, your awareness of the distance between what it says and what you want it to say. That’s what directs you during rewriting, and that’s one of the things lacking when you start with text generated by an A.I."

9. Mirror, mirror

James Vincent from The Verge argues that as we are caught up in the hype around generative AI and the compelling experience of chatting with the tools for long periods (e.g. the infamous example of the first version of Bing Chat telling NYT journalist Kevin Roose it loved him), we are in danger of attributing too much power to a flawed piece of software by mistakenly seeing our own sentience mirrored in the chatbot's LLM. The mirror test is one given to animals - do they recognise themselves in the mirror or think it's another creature entirely?

"in a time of AI hype, it’s dangerous to encourage such illusions. It benefits no one: not the people building these systems nor their end users. What we know for certain is that Bing, ChatGPT, and other language models are not sentient, and neither are they reliable sources of information. They make things up and echo the beliefs we present them with. To give them the mantle of sentience — even semi-sentience — means bestowing them with undeserved authority — over both our emotions and the facts with which we understand in the world."

10. False Promises

This NYT Opinion piece from Chomsky, Roberts and Watumull (although I've mostly seen it just described as Chomsky's) takes aim at ChatGPT, and argues that these tools are overhyped and possess vital flaws that severely limit their abilities. I have an MA in Applied Linguistics, so I was intrigued to hear what Chomsky had to say about LLMs. Essentially, he is arguing that because they learn and use language differently to how his theory of Universal Grammar predicts that humans do, they can never properly reason, and cannot be called intelligent.

"However useful these programs may be in some narrow domains (they can be helpful in computer programming, for example, or in suggesting rhymes for light verse), we know from the science of linguistics and the philosophy of knowledge that they differ profoundly from how humans reason and use language. These differences place significant limitations on what these programs can do, encoding them with ineradicable defects."

To be honest, I feel he is setting the bar pretty high - perhaps taking the 'intelligence' part of current AI at face value. But it could also be read as another reaction to humans failing the mirror test when looking at AI. I also feel like there is probably a lot of research to be done into the capabilities of language models, and we might end up learning more about how humans learn language from studying AI models.

11. Big Promises

This link is a big departure from the thoughtful, reflective pieces I've been sharing so far. Microsoft has doubled down on AI in a huge way this year. It's now copilots all the way down. This online video event is very peak AI hype - and I think a bit weird. (Although the Humane AI pin launch video probably takes the trophy.) The whole thing looks and sounds AI generated (maybe it was?!), but the excitement is palpable and promises are big.

As a fun drinking game suggestion, take a swig every time they say that AI will save us from drudgery. It will be four drinks, which is probably the amount you'd have to have before you think that an AI-generated PowerPoint presentation is a fun idea for your daughter's high school graduation party. I'm sorry Microsoft, but no matter how much AI you stuff into the Office suite, I'm not sure you'll be able to save us from the drudgery of using Microsoft products.

12. Superhuman

Mollick describes an experiment to see how much he can achieve on a single business project in 30 minutes using AI tools. He was able to create a wide variety of materials in different media (emails, tweets, videos, websites) using only 20 prompts in several different AI tools. He wonders what the implications for society are now that people have access to these ‘superhuman’ capabilities. It's an impressive experiment that rightly made a splash on the internet.

While it shows what is now possible with widely available tools, he gives himself some free passes that make it feel more like a tech demo than a real use case. These include excluding from the time limit both the often painfully slow GPT-4 response times and the time it would take to actually finalise and refine all the content for use.

13. AI Tutor

This article from the Washington Post reports on the early experiments with deploying Khan Academy's 'Khanmigo' AI chatbot tutor in a school in California. Khan Academy know what they are doing - the AI tutor is designed through careful prompt engineering to guide and help the student rather than just giving them the correct answers, and is integrated with their existing practice activities. This article describes both the promise, and the perils, of deploying chatbots in schools. No matter how good these bots get, how much time do we want our kids to be spending clicking around and interacting with computers instead of each other? (This interview with Sal Khan goes a bit more into the history and development of the chatbot, and is worth a listen.)

14. Measuring productivity

Meanwhile in productivity, by this time Twitter and other social media platforms were full of obnoxious threads along the lines of "if you're not using these 700 AI tools right now you are falling behind". The premise behind these is that ChatGPT makes a big difference to productivity, and for the kinds of people who like to point to supporting evidence, they point to research studies. That is why I read with a lot of interest this article by Baldur Bjarnason. In it, he takes great delight in dissecting a well cited piece of research that found ChatGPT boosted the speed and quality of work, along with enhanced job satisfaction to boot. He argues that proper studies of productivity are incredibly hard to do well, and that "It’s easier to prove that something conclusively harms productivity, but proving that productivity is increased because of a specific intervention is extremely difficult."

One of his main gripes with the study is that the tasks are not authentic, which affects the conclusions:

"The synthetic nature of the tasks complicates the grading. Essentially, the graders are assessing creative writing or office fan fiction and not actual work product, so they’re going to be grading fluency, not quality of work. ... Anything that primarily measures fluency, disregards hallucinations and overfitting, and is disconnected from actual work is going to favour ChatGPT results substantially."

15. Overemployed

This article from Maxwell Strachan in Vice reads more like an urban myth than anything else, but it seems like they've found real people using ChatGPT to help them work multiple online jobs at once by secretly outsourcing their work to the AI. If this isn't dystopic, at least it's very dysfunctional and unethical. (I was particularly scandalised by the teacher bragging about working on his side hustle during class.) When Mollick describes what AI can do in 30 minutes, the focus on speed of output could be seen as a playbook for this kind of approach to work. It also strikes me that online jobs where it is possible to get away with this are probably the most in danger of being automated away.

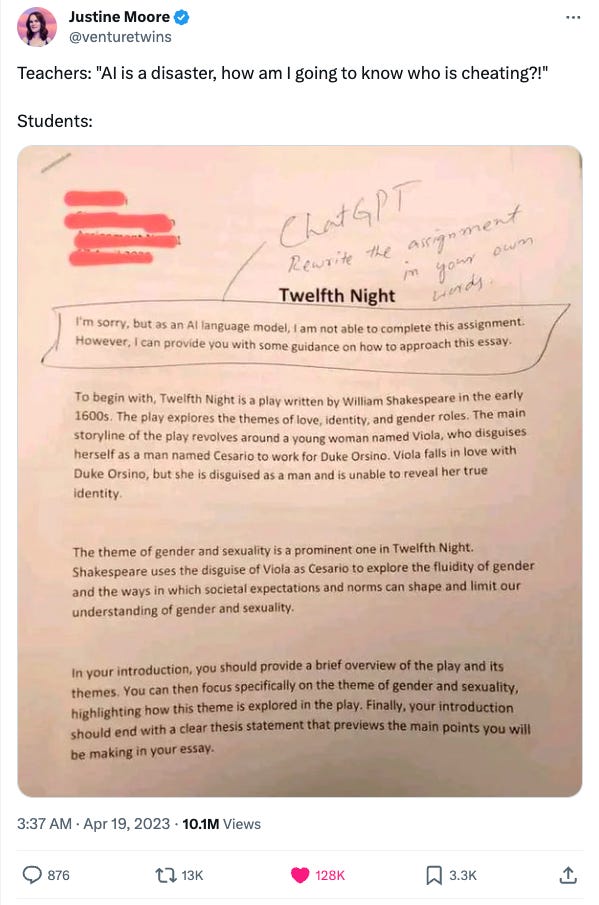

16. As an AI language model...

Speaking of trying to get away with passing off AI work as your own, I include this tweet by Justine Moore as a funny example of the risks unscrupulous users of ChatGPT take when trying to move too fast. This giveaway phrase has popped up in more than just kids’ homework.

17. Hallucinating

This critique from Naomi Klein is a lot of the reason I am undecided about whether to use AI image generators to illustrate this newsletter, and why sometimes I wonder if choosing to use LLMs at all is unethical (...before opening up 5 at once to ask them all the same question to compare outputs.) Klein argues that the term ‘hallucinations’ in generative AI should really refer to the utopian sales pitches for the many benefits of AI (*cough*, drudgery). She says these are distractions from the fact that the training of these models was only possible by a huge theft of the body of human creative output without any compensation or knowledge of the creators whose work was fed into it. She argues that the large claims made by companies for the potential benefits of AI are not based in reality. Take, for example, the argument that AI will help solve the climate crisis. This isn’t about needing AI to tell us what to do, because we already know the solution; it’s just not happening because of other structural issues in our economy, such as a reliance on fossil fuels.

"first, it’s helpful to think about the purpose the utopian hallucinations about AI are serving. What work are these benevolent stories doing in the culture as we encounter these strange new tools? Here is one hypothesis: they are the powerful and enticing cover stories for what may turn out to be the largest and most consequential theft in human history... Because what we are witnessing is the wealthiest companies in history ... unilaterally seizing the sum total of human knowledge that exists in digital, scrapable form and walling it off inside proprietary products, many of which will take direct aim at the humans whose lifetime of labor trained the machines without giving permission or consent."

Of course, there are plenty of creatives taking these companies to court over the question of copyright and fair use in AI, so we'll have to see how it plays out. Personally I hope that we as a society find a way to fairly compensate artists and creators for the data they contributed to the language models...

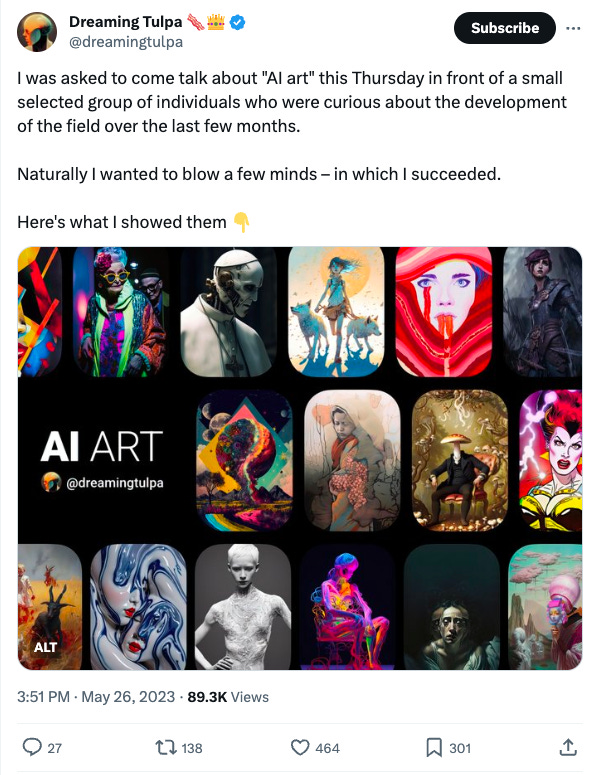

18. AI art 🤯

... because there are some truly amazing things that these models can do. This incredible tweet thread from @dreamingtulpa came out in April and since then the creative tools have only gotten more powerful with new versions of models being released. I've not seen one since that showcases quite so convincingly the wide range of capabilities of the various AI models, from text to image to brain scan to image. As they say at the end of the thread:

“This begs only one question: Wen holodeck? Joking aside, all of the above is the baddest AI (art) will ever be. Soon we might watch our own on-demand generated "Netflix" movies or visit wild west themed parks with synthetic "actors". A lot of sci-fi might soon be just... fiction"

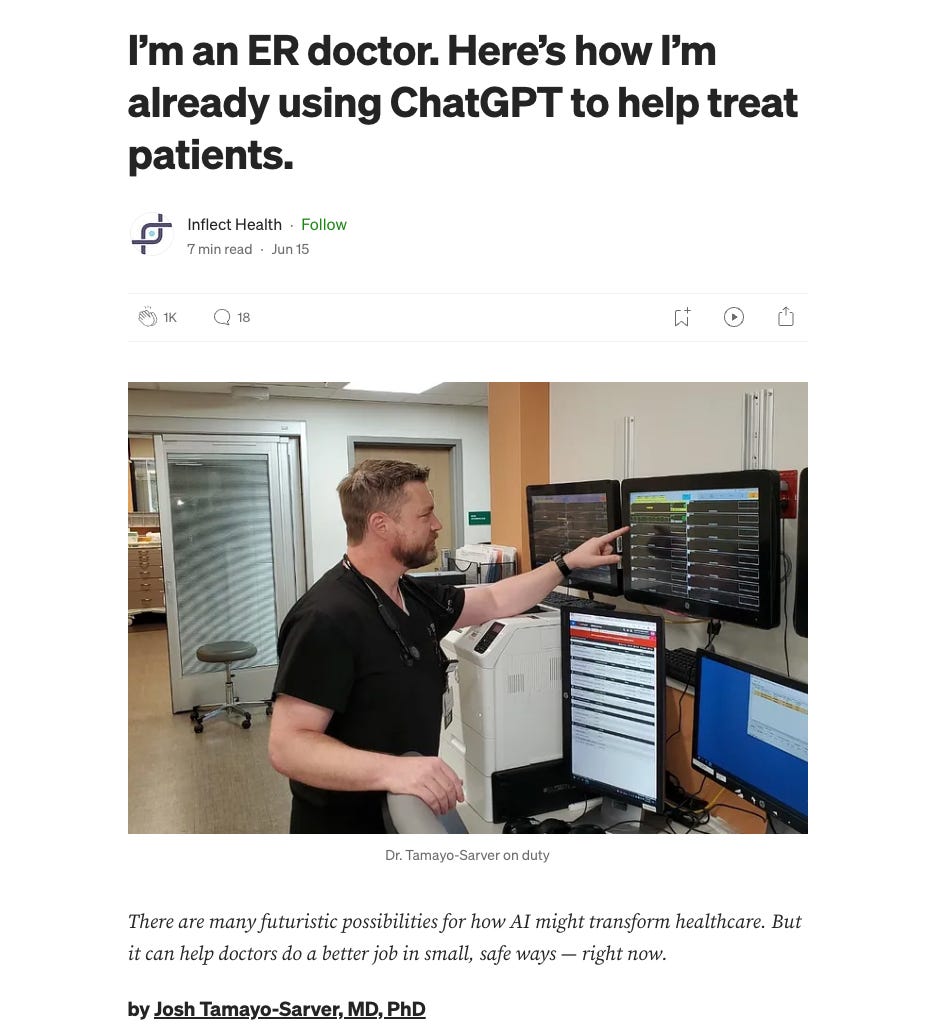

19. Doctor, doctor

In June I came across this post by Josh Tamayo-Sarver, which provides a much more down to earth, but equally powerful example of what AI is capable of. An ER doctor explains that he uses ChatGPT to support his work by generating compassionate, simple-to-understand explanations for complex patient situations. The ER is a high pressure environment which can be life and death, and this use of AI directly addresses a key communication challenge between medical professionals and the distressed people they are helping. It helps reassure patients and concerned relatives, and frees up doctors and nurses to focus on delivery of patient care. Some key things to note - the AI output still needs to be carefully checked by an expert. In this case doctors don't really want to spend time writing, and don't have the time anyway - the written AI output serves an instrumental role where generic, clear language is a help and not a hindrance.

"I am a little embarrassed to admit that I have learned better ways of explaining things to my own patients from ChatGPT’s suggested responses. But I’m also greatly appreciative for the increased human connection I feel from a patient who understands what I am doing for them, and why. ... There is a lot of hype about ChatGPT and other large language models taking away physician’s jobs because of their massive knowledge base. They won’t. But in a curious irony, my ER staff and I are able to devote far more time to the human equation of healthcare, thanks to artificial intelligence."

20. Dud?

Fast forward to August, and this piece by Gary Marcus asks the question, what if generative AI turns out to be a dud? Marcus argues that signs of declining use and lower actual revenues for genAI companies versus the multi-billion valuations could indicate that generative AI might not turn out to be the world-changing technology that it is hyped up to be. Hallucination may never be fixable, artificial general intelligence (AGI) is likely much further off than AI companies predict, and use cases may therefore be limited in a way that makes the financial value of genAI lower and therefore its societal impact lower.

Really, he is making a financial argument rather than a capability argument. As we've already seen from many links above, gen AI is certainly capable of doing quite a few things, not least breaking homework and many kinds of assessment. But running these models is unbelievably expensive, which is why OpenAI and Anthropic need multi-billion dollar investments from big tech companies. If generative AI turns out to just be losing money rather than ever making any, the companies may well decide to turn the models off.

21. Jagged Frontiers

This is why research into the productivity impacts of generative AI is so helpful, and this working paper from the Boston Consulting Group reveals some interesting things about how AI use can impact highly skilled performers in a real world context. The headline - it seemed to give a substantial boost to the quality and speed of their work. You can read the abstract for a summary of the main findings, or Mollick's newsletter about the paper (he was one of the co-authors and, I suspect, the origin of the Cyborg/Centaur metaphor for how to use AI effectively).

I wonder what Baldur Bjarnason makes of this paper, but from a methodological standpoint it seems pretty rigorous to me. They put a lot of effort into experimental design and making the tasks as authentic as possible.

Although the study found advantages for use of AI overall, there are some worrying implications in the details. When participants used AI for the task that was designed to be outside the LLM's capabilities, it actually had a negative impact on quality. Those who blindly accepted the AI output without properly checking it would have been better off not using it at all. In the other task, AI boosted their creativity but also the sameness of their ideas. As the paper argues, another danger is that it is very hard to determine what is within the 'jagged frontier' of AI capability:

"Our results demonstrate that AI capabilities cover an expanding, but uneven, set of knowledge work we call a "jagged technological frontier.” Within this growing frontier, AI can complement or even displace human work; outside of the frontier, AI output is inaccurate, less useful, and degrades human performance. However, because the capabilities of AI are rapidly evolving and poorly understood, it can be hard for professionals to grasp exactly what the boundary of this frontier might be. We find that professionals who skillfully navigate this frontier gain large productivity benefits when working with the AI, while AI can actually decrease performance when used for work outside of the frontier."

22. Little Language Model

I can't think of a better way to finish this list of links than this beautifully illustrated and meticulously researched visual essay by Angie Wang in the New Yorker. In it, she grapples with many of the issues covered in this post, while reflecting on the developing language of her toddler and his future in an AI world. As I mentioned at the top, following the news and discourse around AI this year has felt overwhelming and dizzying. Wang has captured this feeling perfectly, without losing sight of the most important thing to keep in mind when discussing technology - human connection.

So where are we now?

Writing to you on the last day of 2023, that feeling of dizzying change is no longer present. A lot of the initial hype and worry over AI has faded. More and more people are engaging with it and it is becoming more normalised.

The people in this piece have reached for different metaphors and analogies to make sense of generative AI - parrot, intern, tutor, mirror, blurry JPEG, cyborg - and with each attempt at explanation its impact on our culture and society comes a bit more into focus. No doubt next year we'll see still more as we continue to grapple intellectually with an impressive but imperfect new technology that touches on fundamental things like human expression, creativity, and what it means to know and understand.

I feel that the extent to which generative AI will truly disrupt knowledge work in the long term is still an open question. ChatGPT and other AI tools are clearly very capable, but controlled research studies and tech demos are not the same as the messy work of actually using them in your job. The results of my experiments in my own work so far are pretty mixed. As we've seen in this recap, LLMs’ probabilistic nature can make them mercurial, unreliable and tricky to work with.

Regardless, right now it seems like anyone who spends any amount of time working or learning on a computer is potentially impacted. It's critical to develop an understanding of how these tools work and what that means for where they can be a help and where they might be a hindrance. One part of that is reading and listening to smart people like those in this post, but that will only get you so far.

The main thing is to get hands-on experience by using these tools on genuine tasks of value to you, and then tell people what happened. I believe this is one of the best ways to discern true capabilities from the hype, and to contribute to the collective efforts to figure out how to best adapt education and work to these capabilities.

This is why I'll be doing exactly this in Tachyon next year. I'll be back at the end of January to report on my experiments in using ChatGPT for language practice.

What did I miss?

I'd love to hear what links you would include in your own review of the past year of making sense of AI. Let me know by replying to this email or leaving a comment on the Substack post (if you have a free account with Substack).

Sources I follow to understand AI

Here are some great channels I've been following this year that have helped me make sense of AI and keep up to date with news and developments. They are ranked from most accessible to most technical, depending on your appetite for the details. You'll recognise many of the names from the links above.

Hard Fork - this podcast from Kevin Roose and Casey Newton is fun, informative, and most importantly, edited to a reasonable length (under 1 hour each episode). They cover tech news, not just AI, but of course tech news has mostly been AI this year.

One Useful Thing - Ethan Mollick's weekly newsletter has spiked in popularity since he pivoted to exclusively covering AI over the past year. He has an incredible ability to come up with creative and interesting uses for large language models, and to provide novel ways of thinking about the technology and its impact on learning and productivity.

The Algorithm - This weekly MIT Technology Review newsletter about AI written by Melissa Heikkilä is one of my favourites. Short, sharp, and balanced coverage of the news relating to developments in AI. I love how she deftly unpacks technical news and doesn't shy away from reporting on the critical views too.

Marcus on AI - Gary Marcus loves to rain on the AI hype parade in his newsletter. It mostly consists of short, critical responses to the latest news in AI. As a longstanding expert in the field, he is well placed to provide a healthy dose of scepticism regarding the latest claims from AI boosters.

Import AI - Jack Clark is one of the co-founders of Anthropic, and his weekly newsletter breaks down the details of the latest advances in generative AI and why they matter. He closes most newsletters with a mini sci-fi story, which is a fun idea. I do find the "things that inspired this story" a bit annoying, but that's probably the literature major in me.

The end

Thanks for reading. This turned out to be a long one! I promise the next one will be shorter.

I hope you have a happy new year. See you in 2024. 🎉

Antony :)

P.S. This is what I'm reading at the moment, the Cyberiad by Stanislaw Lem. I picked this up in a charity bookshop on my trip to New York in October.

I can best describe it as a collection of short stories about two genius robot inventors called 'constructors' going on medieval quests and getting up to all sorts of mischief. Lem is creative, playful and satirical (although the jokes about characters getting beaten up don't age that well).

What surprised me is how this book published in the 60s resonates so much with our current moment. For one thing, all the characters are 'cybernetic' (i.e. robots) in a medieval universe full of human foibles and emotion - including a lot of hyping up of the new inventions they create. There is no clear line between human and machine. Has technology advanced to such a state that we have been replaced, or merged with it?

Some of the stories explore questions we are asking today. If we (or they) become sufficiently advanced, will we invent machines that can create anything starting with the letter N on demand, which includes true Nothingness and therefore nearly destroy the world? If we want an artificial model that can create truly original content do we need it to internally recreate the development of the entirety of human history starting with the creation of the universe from scratch? If this works and we have an AI model that can write original content, will we decide to blast it off into space to get rid of it?

Just goes to show that the humanities (and sci fi in particular) do offer a fun way of exploring fundamental questions and considering the unintended consequences of technology.

A few disclaimers and caveats about the links in this list:

The selection of pieces is highly subjective, and probably includes all kinds of biases. For example, there is more discussion of the models that output text than other mediums such as images, video or music. I also haven't really touched on important issues like security or privacy.

This isn't an attempt to write a recap of the trends in business, education, or technology - great examples of these already exist. This is more about metaphor and analogies than anything else.

The list is presented in roughly chronological order, although I didn't always read them in order of publication so I've taken a few liberties with sequence.

In each link, for reasons of space I'll be necessarily cherry picking only certain parts, and missing a lot of detail and nuance. I recommend following the links to read in full any post that piques your interest.

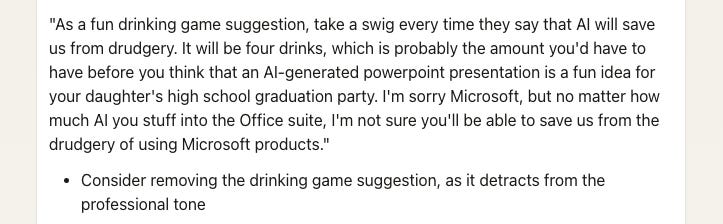

I should take this opportunity to state for the record that this piece was written the old fashioned way. I only used AI (Claude) at the final step to help proofread and act as a copy-editor. It helped catch some mistakes (I’m sure it missed some) and I accepted a couple of rewording suggestions, but I mostly ignored the feedback - for example this one 😂:

Ending the year with a banger with this one, Antony!

This was a great conversational approach to presenting a really holistic, extensive and well rounded capture of what AI is and does.

I'd recommend or forward this on to anyone who has any questions around AI, how it benefits and propels, what the use cases may be and what it means for us going forward. Quite timely going into 2024 as the interest trajectory will continue to escalate!

BTW the addition of images was fantastic, they gave me more context at each point. For example at point 18, "AI Art" can potentially mean different things to different people, but when you show an image, it helps me to quickly understand exactly what you're referencing!

Can't wait for more in '24!