Dispatch 004

Collaborative GenAI Trials | AI and critical thinking | Experiment in AI fact-checking AI

Hello!

In today's dispatch:

Collaborative GenAI Trials in HE - Presentation Recording

A study on AI and ‘critical thinking’

Experiment in AI fact-checking AI

Collaborative GenAI Trials in HE - Presentation Recording

The recordings for the AI in HE Symposium 2025 were released this week, and are all publicly available on the Symposium Resources page.

As promised, here is the recording of my session titled Empowering Educators, Informing Institutions: Collaborative GenAI Trials in Higher Ed.

(Unfortunately, the Zoom recording seems to have glitched a bit at the beginning of my presentation so it’s only audio until 00:32, but the rest is fine.)

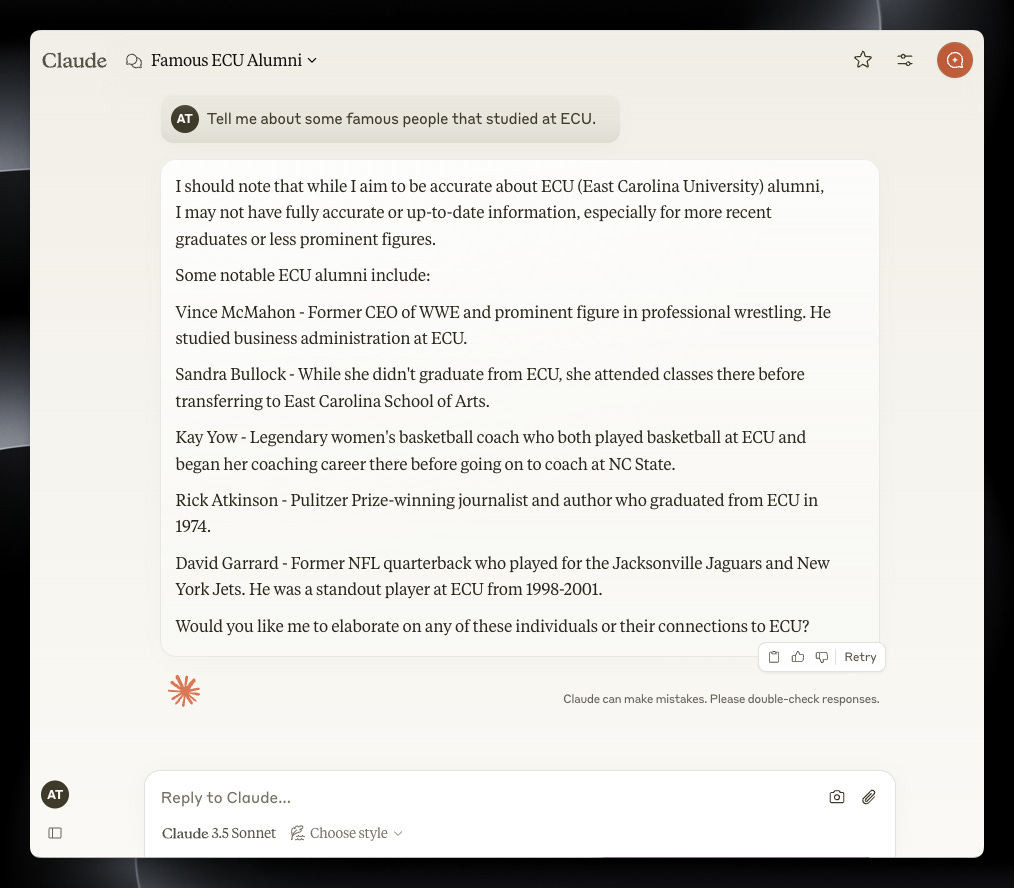

In case you’re curious, here’s the picture on the slide that I mention at the start of the recording when the video isn’t working:

As a special perk for subscribers only, I'm happy to answer any questions you have about the trials I discussed in the presentation. Just hit reply on the email or post a comment in Substack.

Watching again, the only thing I wish I had added was a big shout-out to all my fantastic colleagues at ECU who were involved in the trials or supported them, and to Danny Liu at the University of Sydney for including us in the free Cogniti trial in 2024. Thank you!

A study on AI and ‘critical thinking’

When the headline Microsoft Study Finds AI Makes Human Cognition "Atrophied and Unprepared" (paywalled) popped up in my Bluesky feed, I couldn't resist the clickbait. Is it possible that one of the companies most heavily invested in genAI is funding research that finds this? Unfortunately, that particular post was paywalled, but I enjoyed the discussion of the paper on 404 Media's podcast episode AI is Breaking Our Brains.

After listening, I had a feeling that this paper would be cropping up a lot in the genAI discourse so it was worth taking the time to read in detail. The paper is called The Impact of Generative AI on Critical Thinking: Self-Reported Reductions in Cognitive Effort and Confidence Effects From a Survey of Knowledge Workers.

Here's the abstract:

My take on the paper

The study’s findings are based on self-report data from an online survey of mostly young people who use ChatGPT at least once a week. Self-report means it’s based on what people say and remember rather than direct observation.

In simple terms, my reading of the paper is that it found the following:

Most of the time, for most tasks, people report using 'critical thinking' to make sure that the output is accurate, adjusting it to be relevant to their needs, to avoid causing harm, and to improve their own learning.

They are less likely to say they use 'critical thinking' when the task is low stakes, they are under time pressure, they aren't competent to judge the answers because it falls outside their expertise, or when they believe the AI can do it (or they've observed that the AI has done the same task correctly before).

People who say that they are more reflective in general are more likely to say that they use 'critical thinking' when completing genAI tasks.

If you replace genAI in the above with something like 'reading an article,' I expect you'd find similar results. These are all plausible and sensible reasons to trust or not trust a technology.

My non-clickbait yet cautionary headline would be: the findings of a recent study suggest that uncritical use of genAI to complete tasks in areas where you don't have sufficient expertise is risky. Over-estimating the abilities of generative AI is also risky, and that risk grows as genAI appears able to do more and more tasks. Despite that, the study also suggests that most people who use genAI within their own specialisation say that they think critically about its outputs.

If you really boil it down, what they found is that if you really care about what you're doing, you're likely to be more critical and careful about your use of AI. If you don't, or you're in a hurry, you are less likely to. As with most research, that's a bit of a "well, duh".

Other notable takes

As I expected, I saw it referenced quite a lot throughout the week. Here are some takes I thought were worth sharing.

Deborah Brown and Peter Ellerton jumped on the fun headline trend, wondering Is AI making us stupider? Maybe, according to one of the world’s biggest AI companies. They question the theoretical and methodological approach of the paper, and advise that "The only way to ensure generative AI does not harm your critical thinking is to become a critical thinker before you use it." Very true.

I enjoyed

's rigorous critical reading of the paper. He picks up on the same concerns raised by Brown and Ellerton about methodology and theory and runs with them in A Critical Analysis: Microsoft’s January, 2025, Study of Critical Thinking and Knowledge Workers. I was persuaded by his piece that "the study's conclusions about critical thinking and its potential deterioration must be regarded as speculative rather than empirical, representing hypotheses for future research rather than supported findings." It's also why I put ‘critical thinking’ in scare quotes above.

In contrast,

finds little to quibble with on the methodology or theory of the paper and instead focuses on how it contributes to growing research on the effects of "cognitive atrophy from too much AI usage" in brAIn drAIn. He lands in a place that is very much in line with the spirit of this newsletter: "Skepticism of AI capabilities—independent of if that skepticism is warranted or not!—makes for healthier AI usage. In other words, pro-human bias and AI distrust are cognitively beneficial."

An experiment in AI fact-checking AI

On the topic of AI distrust and pro-human bias, I want to share a small experiment I ran this week.

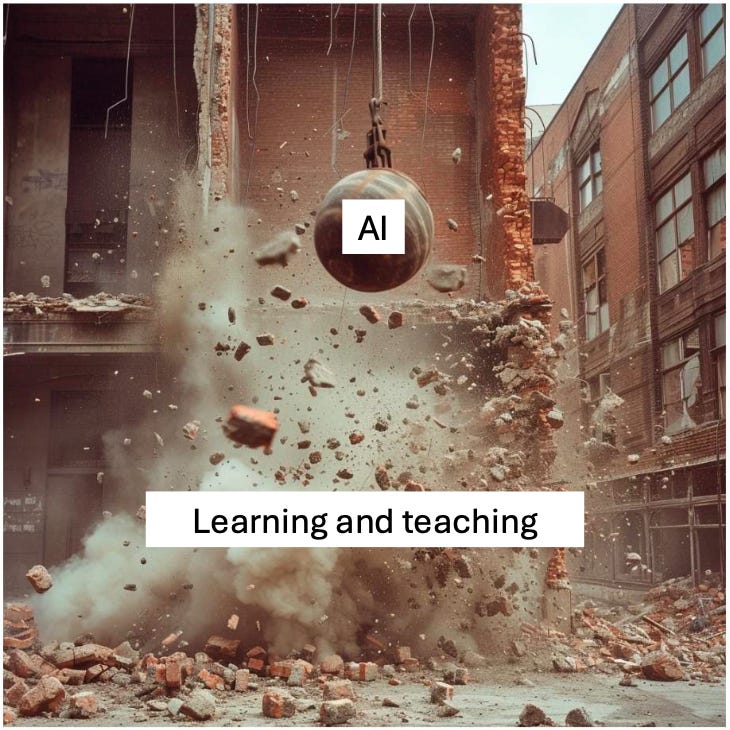

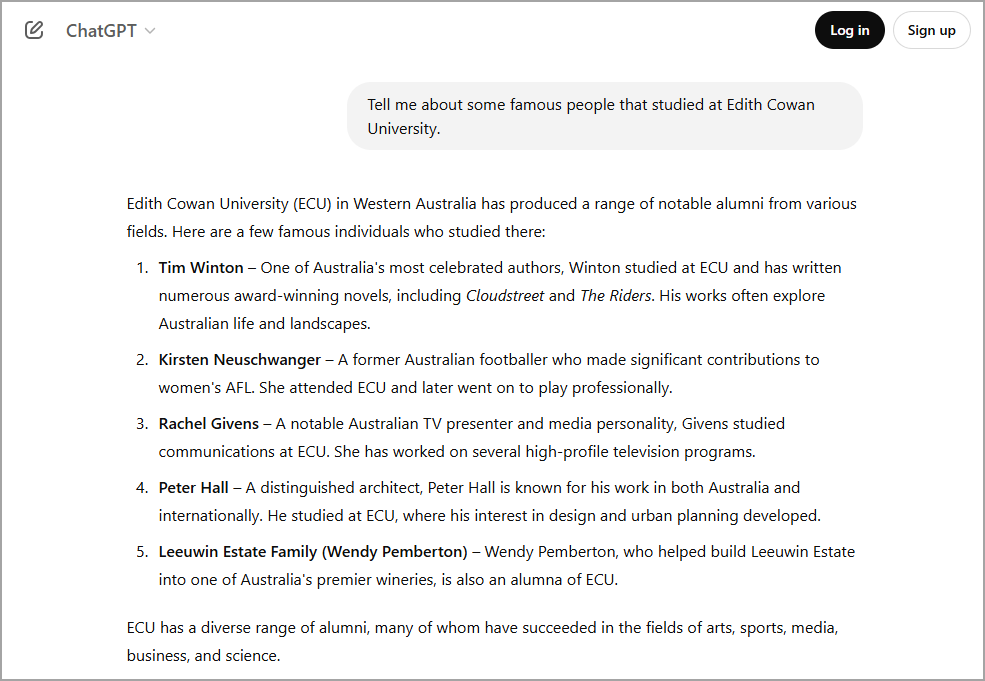

I've been updating an online course about genAI literacy that was originally written in the middle of last year. I revisited an example I created to demonstrate genAI hallucinating some plausible but wrong information. It involved asking ChatGPT 3.5 to tell me about notable alumni from our institution. 3.5 hallucinated the entire response.

Since you can't even use 3.5 any more in ChatGPT, I needed to redo the experiment. Advances in models and the addition of online search capability made it a bit harder than last time. The default paid version, GPT-4o with web search enabled, returned real ECU alumni - score 1 for genAI. But when I forced GPT-4o not to search the internet, several fake alumni snuck into the list. 1 all. When I used the free version of ChatGPT, which is powered by GPT-4o mini (the dumbest and smallest OpenAI model) and doesn't use web search, it performed just as badly as the obsolete ChatGPT 3.5 had, with the entire list being fabricated. Advantage human.

How did I verify which outputs contained hallucinations? In some cases, I knew already that the alumni were really from ECU (e.g. Hugh Jackman), but for most of them I wasn't so sure. Off I went to Google - searching for the people tab by painstaking tab. Flexing those ‘critical thinking’ neurons.

In this way, I discovered that all five alumni provided by ChatGPT-4o mini were incorrect and that five out of the ten provided by GPT-4o were incorrect.[1] Human 3, AI 1.

It probably took about 30 minutes to fact-check. It occurred to me that ChatGPT and many other platforms now have the ability to search the web for me. Could they help me to do this faster? To find out, I opened some new tabs, this time with AI in them.

I tried six different versions of AI across four platforms. For the list of five fabricated alumni from GPT-4o mini, all six AIs performed the fact-checking perfectly and identified all five errors. Promising start! Service AI, 2-3.

How about the longer list with a mix of five real and five fabricated alumni? Only Perplexity on default mode was able to correctly fact-check the entire list. The most powerful version of AI, o3-mini-high, was close. It only lost out on a technicality. Disappointingly, all the other AIs made at least one mistake, missing different false claims each time.

I'm going to be harsh here - for fact-checking to be useful, all the facts should be correct. 3 all.[2]

Somehow the nonsensical game of fact-checking ping pong ended up a tie, which I guess isn’t surprising if you get points for completely different things. In the end, who wins? I guess I do - as the human I am still better at fact-checking. It's a somewhat Pyrrhic victory, seeing as ChatGPT is the one who was making all this stuff up in the first place and sending me on wild goose chases!

Anyway, here are some of the questions behind my choice of the six AI tools, and the answers I found through the experiment.

Could ChatGPT fact-check its own output by searching the internet?

Not reliably!

Do more powerful reasoning models equipped with web search do a better job at fact-checking than regular ChatGPT?

Within ChatGPT itself, it seems like it, as o3-mini-high was better than the regular model. However, the standard Perplexity gave a better result than its reasoning mode, which also uses o3-mini. Gemini struggled on the longer list. It's inconclusive.

Can models that don’t have web search capabilities like Claude fact-check based on the information contained in their training data?

In this little experiment, Claude without the web search capability performed on par for fact-checking with tools that do have web search. This was a bit surprising to me. But this isn't the case for output. When asked the same query ("Tell me about some famous people that studied at ECU."), Claude gives results from another ECU entirely - East Carolina University.

What conclusions can we draw from this little experiment?

AI is tantalisingly close to being a useful fact-checker, but the random false positives and false negatives that sneak into almost all the responses are a deal breaker - unless you want to spend time also fact-checking AI responses. We'll have to keep doing the fact-checking ourselves for now.

Dispatched

Keep thinking, friends. Have a great weekend.

Antony :)

Thanks for reading. Tachyon is written by a human in Perth, Australia.

Subscribe to receive all future posts in your inbox. If you liked this post and found it useful, consider forwarding to a friend who might enjoy it too.

[1] Here are the facts for the list generated by GPT-4o: Jackman, McCune, Courtney, Minchin, and O'Conner all studied at ECU, specifically at WAAPA. Booth, Embley, Templeman, and McLeod never attended ECU. Bon Scott, the AC/DC frontman, attended the Teachers College that later became part of ECU, so technically, he isn't an ECU graduate either. Five real alumni, five fabricated.

[2] Here are the exact AI versions used, with links to chats where the platform allows sharing.

List of 5 fabricated alumni from GPT-4o mini

Correctly fact-checked all five claims:

Gemini 2.0 Flash Thinking Experimental with apps

Claude 3.5 Sonnet

List of 10 (5 fabricated) from GPT-4o

Correctly fact-checked all 10 claims:

Perplexity (default mode)

Made at least one mistake:

ChatGPT o3-mini-high only made one mistake - it claimed that Bon Scott graduated from ECU due to "historical continuity", but this is technically incorrect

Claude made one mistake

Gemini 2.0 Flash Thinking Experimental with apps made 2 mistakes

Perplexity (Reasoning with o3 mini) made 3 mistakes
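
For anyone who wants to repeat the tally, here is a minimal Python sketch of the harsh scoring rule I used: a fact-check only passes if every claim is judged correctly. The ground truth comes from the footnote above, with surnames spelled as they appear there. The function name `score_fact_check` and the sample verdicts are my own illustration, not output from any of the tools. The sample is modelled on the o3-mini-high result, which got everything right except Bon Scott.

```python
# Ground truth from the footnote: True = studied at ECU, False = did not.
GROUND_TRUTH = {
    "Jackman": True,
    "McCune": True,
    "Courtney": True,
    "Minchin": True,
    "O'Conner": True,
    "Booth": False,
    "Embley": False,
    "Templeman": False,
    "McLeod": False,
    "Bon Scott": False,  # attended a predecessor Teachers College, not ECU itself
}


def score_fact_check(verdicts, truth=GROUND_TRUTH):
    """Compare a checker's verdicts (name -> True/False) with the ground truth.

    A false positive accepts a fabricated alumnus as real;
    a false negative rejects a real one.
    """
    false_positives = [n for n, real in truth.items() if verdicts[n] and not real]
    false_negatives = [n for n, real in truth.items() if not verdicts[n] and real]
    return {
        "false_positives": false_positives,
        "false_negatives": false_negatives,
        # Harsh rule: the check is only useful if there are no errors at all.
        "passes": not false_positives and not false_negatives,
    }


# Illustrative verdicts: identical to the ground truth except that
# Bon Scott is accepted as an ECU graduate.
sample_verdicts = {**GROUND_TRUTH, "Bon Scott": True}

result = score_fact_check(sample_verdicts)
print(result)
```

With these sample verdicts the sketch reports Bon Scott as the single false positive and marks the check as failed, which is the "technicality" that cost o3-mini-high its point.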