Hello from the end of what was a pretty hectic week. 😅

In this week's dispatch:

Highlights from my WATLF Workshop

Presenting at the AI in HE Symposium

Highlights from my WATLF Workshop

At the WATLF on Thursday I facilitated a 90-minute workshop about making sense of and trying out some of the biggest developments in genAI since ChatGPT came out in 2022.

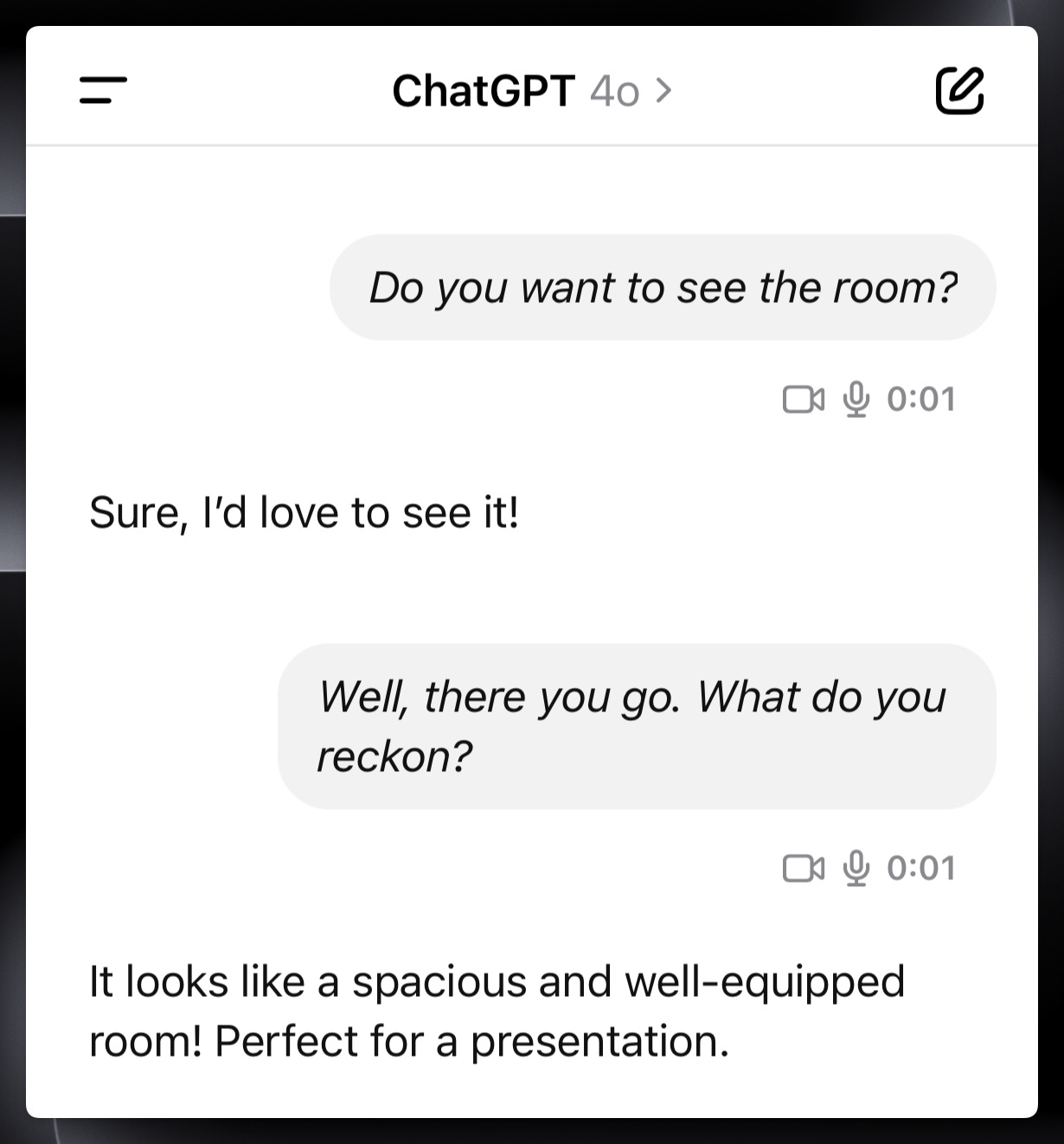

While setting up the demos for the workshop, I tested ChatGPT Voice with vision on my phone, showing the AI the empty teaching space via the camera. I didn’t record a video of the interaction, but the chat transcript below shows the rather amusing advice it gave me after I told it I was giving a presentation at a conference. After suggesting I add a bit more of a party vibe to the room, it also approached the problem of not having any audience with cheerful mechanical optimism.

Thankfully I didn't have to take the AI’s advice to just imagine my audience because about 45 people attended the actual workshop.

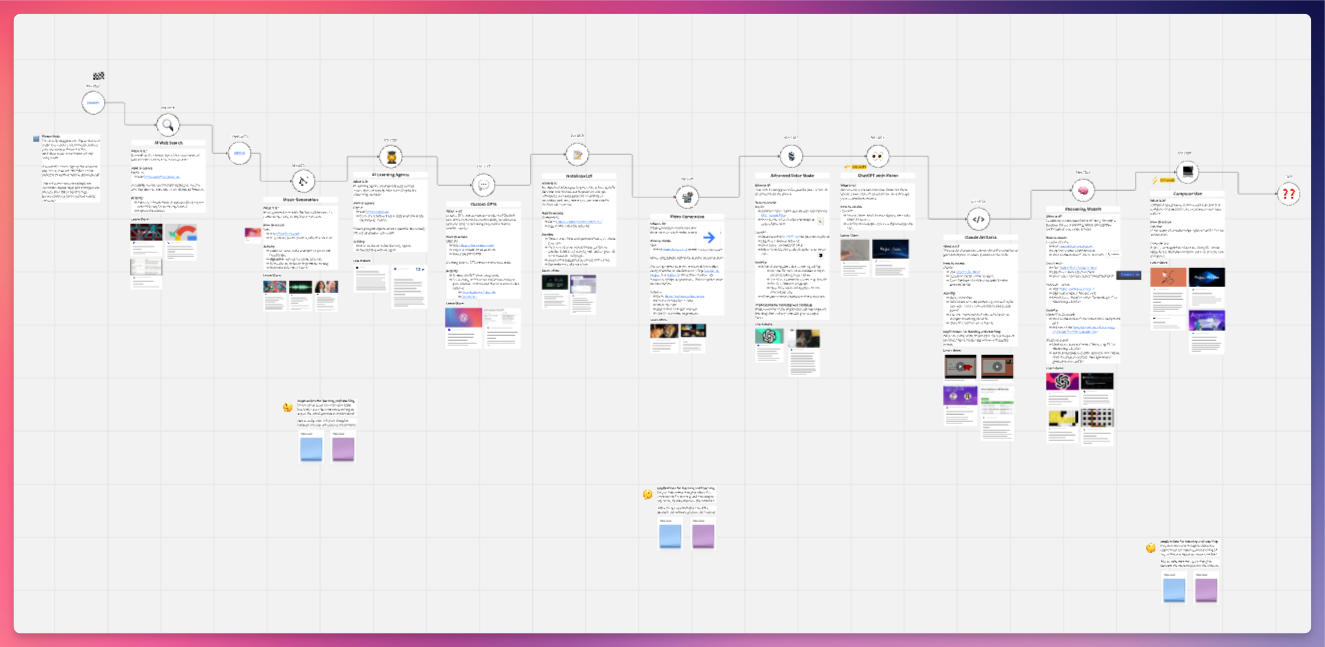

The main activity was an interactive timeline of my rather biased curation of the most significant developments in genAI, which I created in Miro (see the fine print at the end of this post).

This timeline is live on the web and anyone with the link in the button below can access it. Feel free to explore the board for yourself and share it with anyone who would be interested.

How to use it

You don’t need to sign up for an account with Miro to access the board. Just open the link and dismiss any log-in or sign-up prompts.

Pan around to explore the timeline. Zoom in and out using the buttons in the bottom left, or by holding CMD/CTRL and using the scroll wheel.

For each development, there is a brief explanation followed by instructions on how to access it and then a suggested task.

Underneath are some hand-picked articles or videos where you can learn more.

If you just want to dip your toes in, I would recommend trying ChatGPT Voice mode or NotebookLM. If you work in education I'd also say it's worth taking a look at the learning agents.

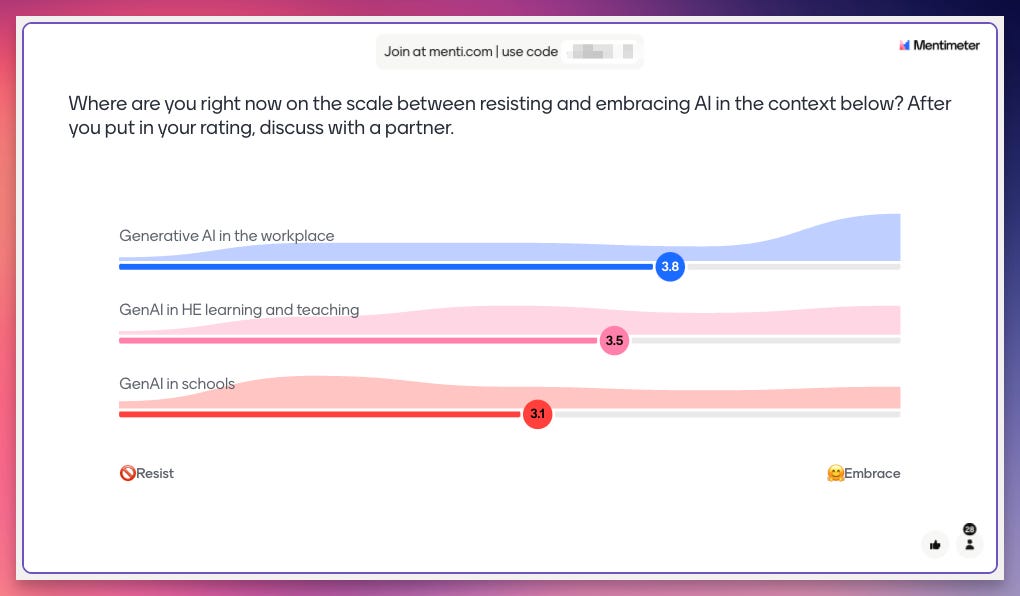

We finished with a discussion and some questions in a Mentimeter poll. Perhaps unsurprisingly for an audience that was interested in AI, most people tended towards the 'embrace' end of the scale, in particular for their own work. When it comes to our kids’ use of AI at school, though, it seems not so much.

How about you?

There were many excellent responses to the 'hot takes' final question slide (screenshot below), which offer an insightful snapshot of the concerns and approaches of academics and professional staff working in higher education, at least in WA. I could spend a whole post unpacking the different themes in there, but instead I'll let you have a quick read for yourself.

Do you have a hot take on genAI? I'd love to hear it. Just reply to this email or post a comment on the post in the Substack app or online.

Sadly I wasn't able to attend any further sessions at WATLF as I had to head to the airport to make it to Sydney in time to get a night's sleep…

Interlude

Presenting at the AI in HE Symposium

The AI in HE Symposium was hosted by the University of Sydney. This event had almost 3000 registrations online and face-to-face, and there were three parallel streams throughout the day on topics like assessment, AI literacy, AI simulation and scenarios, critical thinking, and engaging students with AI.

The event was well organised and the calibre of the sessions was really high. The energy did dip and the crowds thinned a little as the day went on, but that is understandable considering the relentless pace of the 12-minute presentation format. I still need to catch up on the recordings of several presentations that I missed to get a fuller picture, but overall I appreciated the focus on practice. I also really valued the chance to connect with old friends and colleagues as well as make new connections.

I presented fairly early on in the faculty engagement and leadership stream and, like everyone else, only had 12 minutes to talk. I had about 40 people in the room and I’m not sure how many online. I won't say more about my session as I hope to be able to share the full video here once the organisers release the recordings.

Dispatched

Ok, I need to put my feet up and catch my breath. That's it for this week. Have a good one.

Antony :)

Thanks for reading. Tachyon is written by a human in Perth, Australia.


PS

What I'm watching

My wife and I have started watching Season 2 of Severance. It's great to see that this kind of cryptic high-concept sci-fi show is so popular and still going strong. The cinematography, sets and performances are on point. They give it a cold, clinical and sinister vibe that is perfect for the storyline.

In case you missed it, there was also a fun promotional event for Severance in New York: a live performance with the main characters in a small recreation of the office set. This post on Macworld has some pictures that give a good sense of what it was like to be there. I also enjoyed The Verge’s piece, “Adam Scott on using Severance’s weird, retrofuturistic computers”.

Here’s the fine print on the development of the timeline:

Because the timeline was already overwhelming, and I wanted to focus on the most significant developments for learning and teaching, there were many things that I chose not to include. For example, I didn't talk about the open-source release of Llama, the ability to run local models on a consumer laptop, or the disturbing trend of genAI being used to create plausible but non-existent synthetic humans and to deepfake specific people with increasing realism.

I've taken some liberties with the exact dates, because some features are announced long before they come out (e.g. Sora), or different versions of the same functionality have come out at different times (e.g. o1 Preview versus DeepSeek R1).