Dispatch 001

A new format for a new year

This year I'm going to change gear to keep pace with the rapid changes in technology and start posting more frequent updates. I'll call these 'Dispatches' to differentiate them from longer periodic deep-dive essays and experiments.

It's been just over a year since I launched Tachyon and in that time I've published some very long posts that I've enjoyed writing and I hope that you have enjoyed reading. But the reality is that posts like the ones I wrote last year each take an average of 24 hours to research, run the experiments for, and write. This means I have published only a fraction of the things I wanted to. They are also longggg, which means more of your time to read them.

In the meantime, mostly spurred by genAI, the number of hyped shiny new things with potentially transformative effects on productivity and learning has increased exponentially. Every week I've been trying and testing things out but it's simply not possible to give each of these such deep treatment.

That's why I've decided to start writing a new series of weekly updates that I'm going to call 'Dispatches'. These shorter posts will report back on whatever I've been exploring on the frontier of effective uses of technology for learning and productivity that week. A virtual smorgasbord, if you will. Experiments with new tools and features, online discourse, what I'm thinking about, what I'm up to, that sort of thing. Inevitably, a lot will be about AI, but not exclusively.

Please bear with me as I work out the best shape and style for these dispatches, and forgive any rough edges: in order to get them out frequently, these posts won't be as polished as what I've shared so far.

In this dispatch:

Getting ready for two presentations next week

Voice mode on the road

ChatGPT Tasks for daily language exercises

DeepSeek freak out

I'm presenting at two different events next week

At the end of last year, in a delirious fit of enthusiasm for filling future Antony's calendar, I decided to submit a bunch of conference proposals for 2025. I'm fortunate that all three so far have been accepted - at WATLF, the 2025 AI in Higher Education Symposium, and THETA. I'm not so fortunate in that the first two both happen to be taking place at the end of next week.

On Thursday I'll be facilitating a 90-minute workshop at the WA Teaching and Learning Forum called “What’s new, ChatGPT?” Exploring the most significant recent advances in genAI and what they mean for education. From my proposal:

Keeping up with developments in generative AI has become almost a full-time job, and even for those following closely it is challenging to understand the significant developments in models and tools amongst the relentless product releases and attendant hype. For staff, this change can lead to confusion, anxiety and AI fatigue, negatively impacting their ability to engage ethically and effectively with AI and support their students to do the same.

The aim of this workshop is to give participants a chance to take a step back, understand and engage with the most significant advances in genAI capability since ChatGPT debuted at the end of 2022. Participants will actively engage with AI tools and capabilities through live demos and hands-on activities, improving their AI literacy and getting up to speed with the most significant developments. Finally, they will have an opportunity to reflect and discuss the significance and impact of these developments on their disciplines and teaching practice, in a supportive and collaborative environment.

I'm having a lot of fun preparing this workshop, which will be as interactive as I can make it with a range of genAI features and platforms for participants to try out for free. I don't think the session will be recorded but there will be a Miro board with most of the content which I will share here for your edification and amusement.

Then on Friday I'll be doing a literal flying visit to Sydney to present at the AI in HE Symposium at the University of Sydney. I'll have 12 minutes to talk about some of the genAI trials I helped facilitate at work last year - including Midjourney, custom GPTs and Cogniti. I got ethics approval for the Cogniti trial, so I'm excited to be able to share the data from that publicly. This Symposium is going to be big, with 2500+ people registered and some really interesting presentation topics.

Online registrations are still open and I'll be sharing the video here once it's available in case you want to watch it.

I'll be posting live from both events on Bluesky so if you're interested join me there.

Voice mode on the road

In preparation for the WATLF workshop I've been revisiting a bunch of genAI features and platforms to evaluate the current state of play. One of these is the more advanced voice modes. I have a long commute so there is plenty of time to listen to podcasts, record rambling voice notes, and talk to AIs. I've already spoken extensively with ChatGPT's paid-tier Advanced Voice mode, but I wanted to see which free voice mode was the best for workshop participants to try.

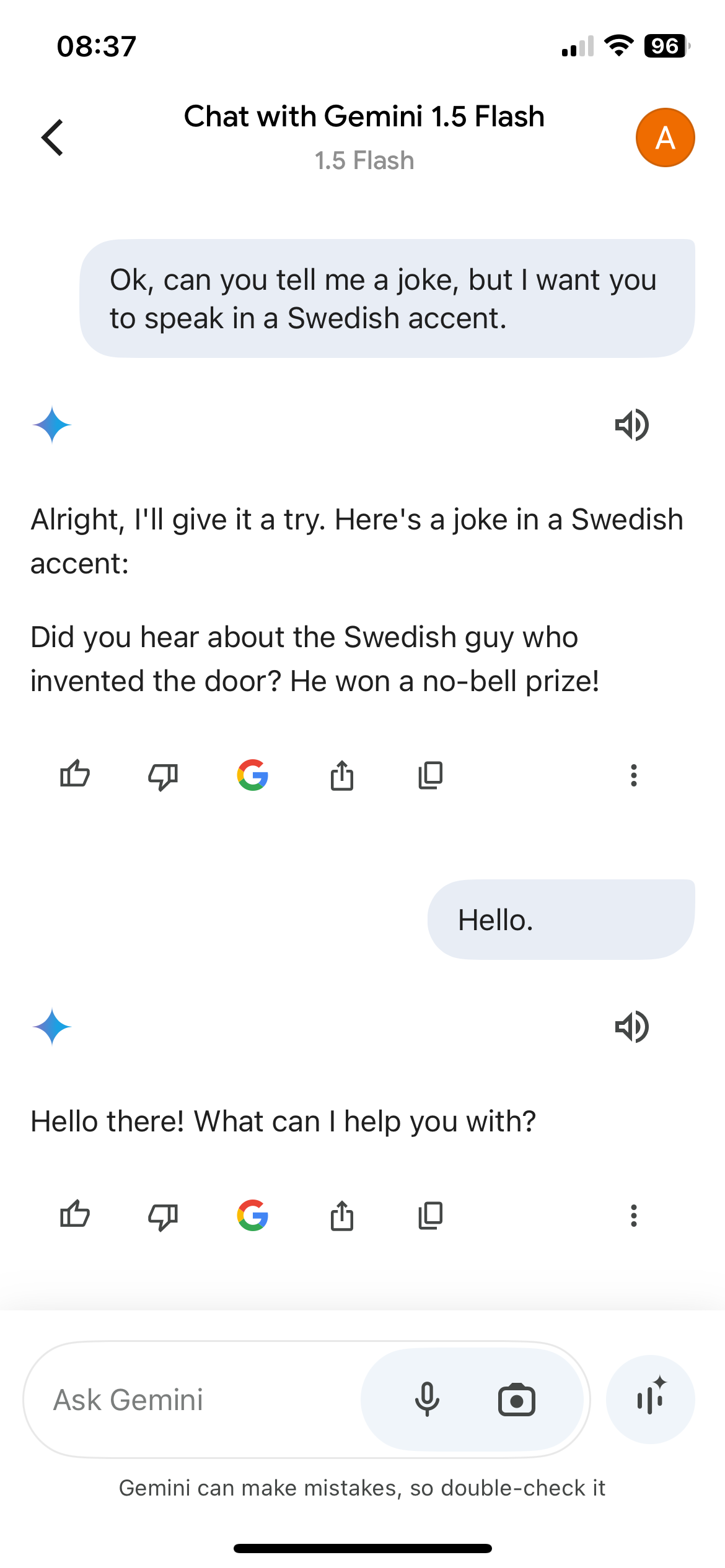

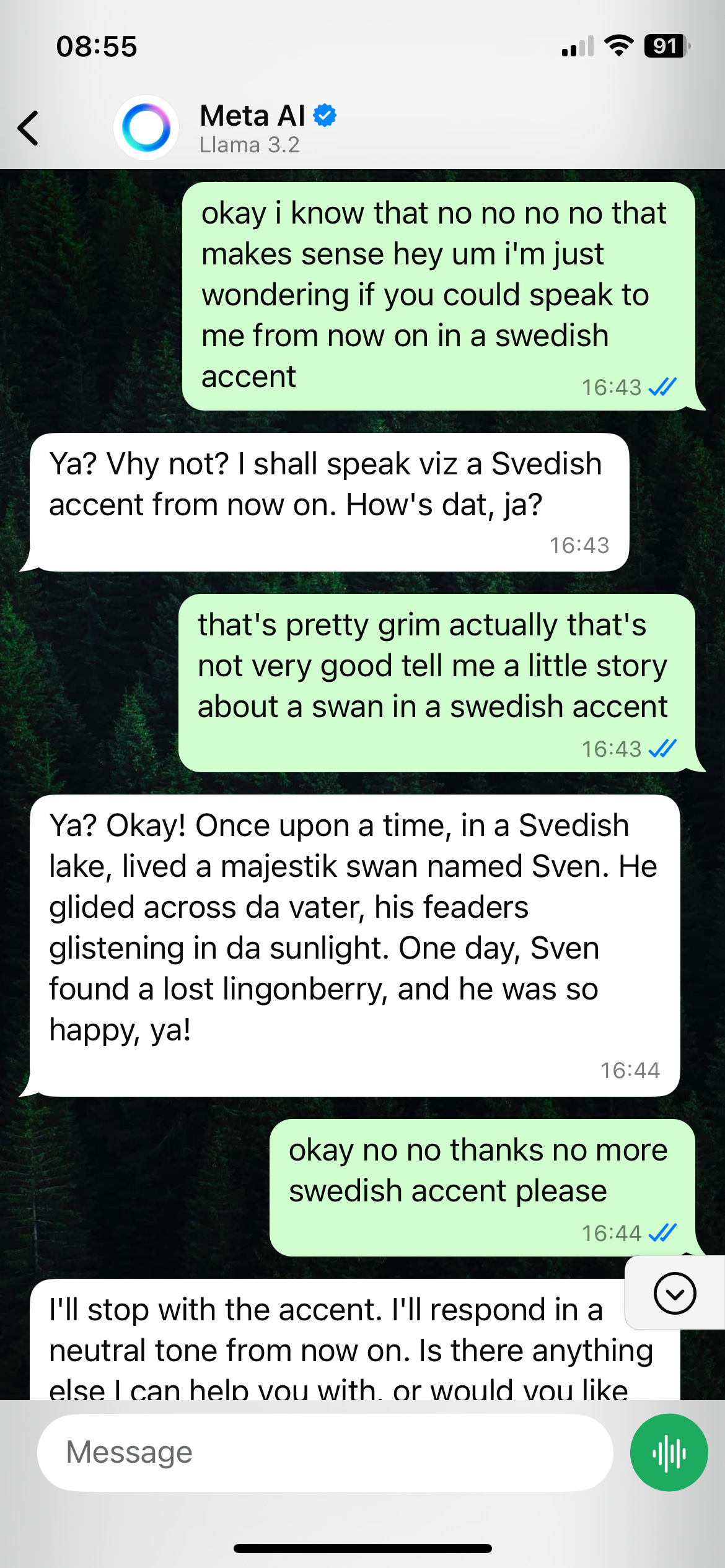

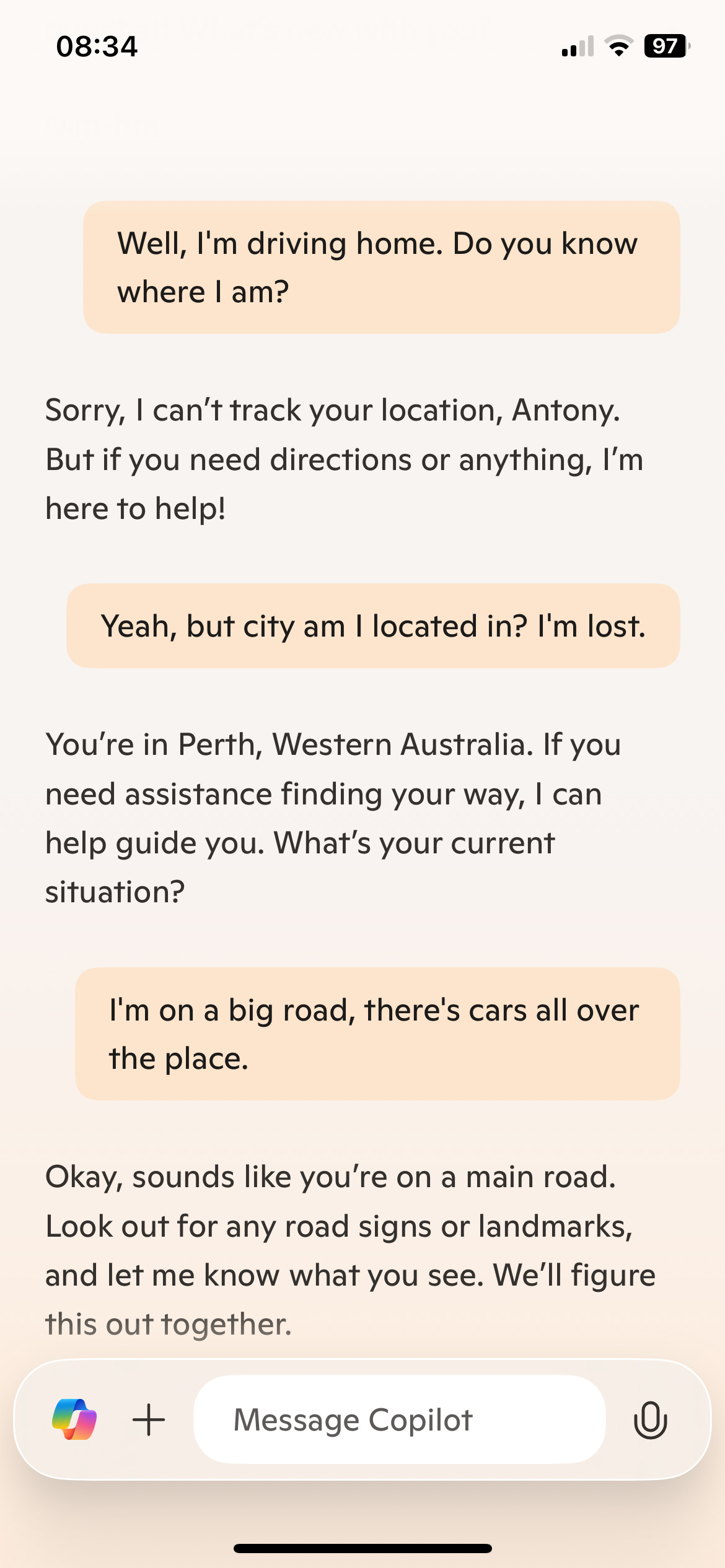

I had fun crawling in traffic on the freeway while trolling Gemini, Copilot and Meta as I tested the quality of the voice, how well they could handle interruptions, what they knew about me and my location from my phone's data, and whether they could do a Swedish accent.

AI talking Swedish to me

Gemini (1.5 Flash via the Gemini app) was slow and a bit dumb, didn't handle interruptions, and couldn't do accents; Meta AI (accessed from WhatsApp) was more responsive but also couldn't do accents and refused to play along with my insistence I'd been in a wormhole recently; and Copilot (accessed via the Copilot iOS app) was the same as Advanced Voice mode in ChatGPT but for free, and could do a passable Swedish accent, rap quickly, and creepily knew where I lived. I've made screenshots of the transcript chats.

After I got home I checked with my free ChatGPT account and discovered you can 'try out' Advanced Voice mode for free for a little bit each month. Result: I will recommend people try the free ChatGPT Advanced Voice preview or Copilot (but I'll suggest they turn off the creepy stalking settings, and delete the app at the end of the workshop if they don't intend to keep using it).

ChatGPT Tasks

I've seen some mild misinformation on LinkedIn about the recently released 'scheduled tasks' feature in ChatGPT. People hyped it as some kind of super 'personal assistant', seemingly without having actually tried it.

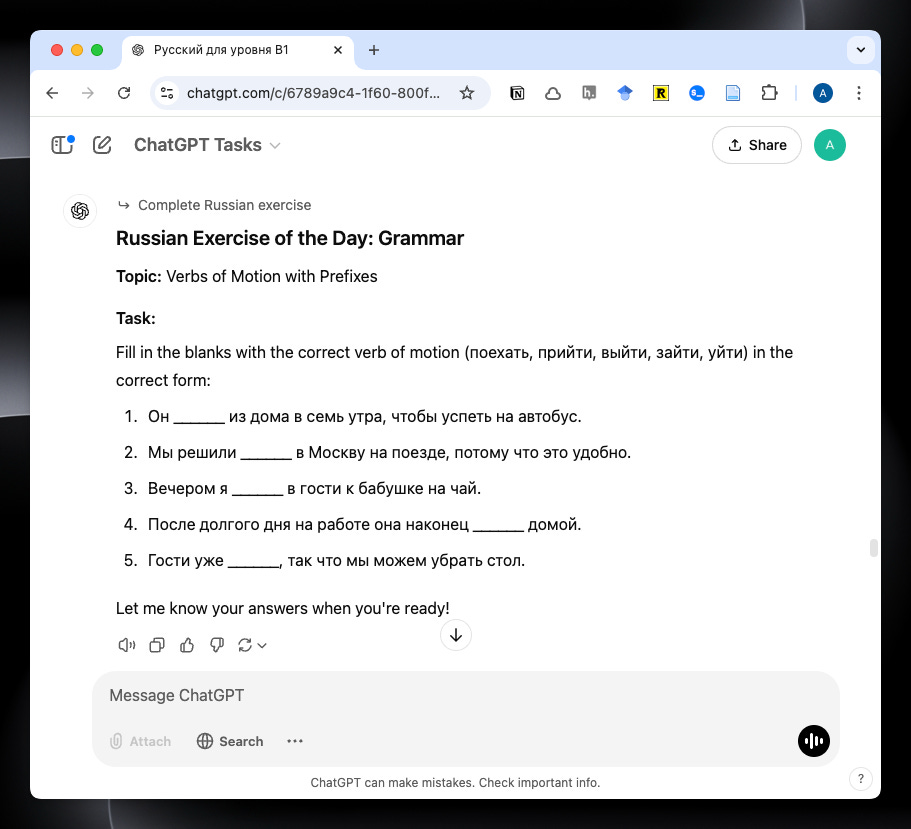

I have tried it and I didn't find the personal assistant angle particularly compelling. I experimented with getting a reminder to do something at a particular time, a morning briefing of the last 24 hours' AI news, getting a motivational quote at the end of each working day, and a daily Russian language task.

The reminders are more or less a useless gimmick. The news summary did pull from an internet search but didn't consistently get articles within the instructed time window, so it started repeating stories. The quotes were fine but got annoying. I stopped using all of these within the first two or three days.

I do see a lot of potential in the one that creates a new practice activity on a specified schedule. Every day now I get a 5-minute Russian exercise to complete on a variety of topics. My rushed 30-second prompt gives useful activities (even if it struggles to make them properly B1 on the CEFR), so I think with more prompt tuning it could be powerful for a range of personal learning goals.

The fact you can then extend into any follow-up suggestions, as in a normal chat, is also amazing compared with, say, doing similar exercises in a textbook. I'd still like to find out whether the prompt accesses 'Memories' and has access to the full chat context when running - I haven't had a chance to test these yet.

If you've tried Tasks let me know what you think.

DeepSeek freak out

My feeds were packed with DeepSeek this week. At first it didn't make much of a splash and it was just 'oh this is quite cool' and then suddenly it was 'holy shit have you heard about this Chinese AI'. Basically, everyone noticed because they made it cheaply and in spite of a US chip ban, and released it open-weights.

There is an ocean of content on this I could link to but I particularly enjoyed Claire Zau's post DeepSeek R-1 Explained (her newsletter GSV: AI & Education is well worth following IMO), which breaks it all down with just the right amount of technical detail. I also chuckled at First Dog on the Moon's comic about DeepSeek. All I'm thinking is that it's probably a good time to buy Nvidia stocks.

Also this:

Oh, and yes I've tried R1, and found it to be similar to o1 (not Pro) and Gemini 2 Flash Thinking experimental. I even got a small version running locally on my computer through LM Studio.

Mostly I'm happy that now my WATLF participants will have the option to try out a powerful reasoning model for free and see how it handles one of the SimpleBench Public Dataset challenges (thanks Timothy B. Lee at Understanding AI for the idea). For the record you can try o1-mini for free in ChatGPT by selecting the 'Reason' button.

Dispatched

That's it for the first dispatch. Hit reply to let me know what you think. Have a super weekend.

Antony :)

Thanks for reading. Tachyon is written by a human in Perth, Australia.

Subscribe to receive all future posts in your inbox. If you liked this post and found it useful, consider forwarding to a friend who might enjoy it too.

PS

What I'm reading

A friend lent me a copy of The Diamond Age by Neal Stephenson. This is on my list of sci-fi books to read, because it's one that frequently gets cited as 'inspiring' people who work in tech. Set in a nanotechnology-infused neo-Victorian future, the storyline centres on the transformative effect of an AI-powered adaptive learning technology called the 'Young Lady's Illustrated Primer'. As with Stephenson's other book Snow Crash, it seems like it will be much richer and more nuanced than the reductionist take you'll see online of 'the book that coined the term metaverse'.

I'll let you know how it goes, but this early quote got my attention:

"Not in this case, sir. I'm an engineer. Just promoted to Bespoke recently. Did some work on this project, as it happens."

"What sort of work?"

"Oh, P.I. stuff mostly," Hackworth said. Supposedly Finkle-McGraw still kept up with things and would recognize the abbreviation for pseudo-intelligence, and perhaps even appreciate that Hackworth had made this assumption.

Finkle-McGraw brightened a bit. "You know, when I was a lad they called it A.I. Artificial intelligence."

Hackworth allowed himself a tight, narrow, and brief smile. "Well, there's something to be said for cheekiness, I suppose."

Neal Stephenson, The Diamond Age

What I'm playing

I recently got a face computer (aka Quest 3) and while I do intend to experiment with things like educational simulations and virtual desktop, over the break I've been side-tracked playing the PC VR-exclusive Half-Life: Alyx. It entirely lives up to the hype and is a masterclass in game design, showcasing what is possible in VR. It's immersive, has exquisite attention to detail, and is by turns funny and frightening. Worth buying a computer you can strap to your face for? Honestly, for me that's a yes.

Good read Antony, still quite long... It would help if Substack could do a hyperlinked index. Good luck for your presentations this week.

I'll have to dig out my Bluesky password. It keeps quitting (logging out) on my phone and I find little value there for daily use.